AI Therapy Has a Trust Problem. Should You Rely on a Chatbot?

Mental health support has never been in such high demand. Anxiety and depression rates continue to climb worldwide, yet the number of trained therapists is not keeping pace. Into this gap have stepped chatbots and wellness apps, offering instant conversations, daily mood tracking, and even cognitive behavioural therapy techniques on demand.

This explosion of AI therapy tools reflects both a sense of urgency and optimism. Studies estimate that nearly 50% of the people who could benefit from psychotherapy never receive it, blocked by barriers like cost, stigma, or lack of access. AI offers a compelling solution: affordable, anonymous, and available 24/7.

But the question remains: can these tools be trusted with something as sensitive and complex as mental health? Behind the convenience and promise lies a growing debate about reliability, safety, and the irreplaceable role of human connection in therapy.

The Promise of AI in Therapy

1. Accessibility

The appeal of AI therapy is easy to see. Unlike traditional treatment, which can be costly and complex to schedule, chatbots and mental health apps are available anytime, anywhere, at a fraction of the price. For people in rural areas or those without insurance, this kind of 24/7 access can mean the difference between receiving some support and none at all.

2. Anonymity

Staying anonymous is another strong advantage of AI tools. Many users report that it feels easier to open up to an AI than to a human therapist, especially when discussing stigmatised issues like addiction or trauma. This “digital confessional” effect has been linked to higher disclosure rates and more honest conversations.

3. Proven practices at home

Early clinical trials add weight to the promise. Randomised controlled studies of chatbots such as Woebot (a CBT-based app developed at Stanford), Wysa (a widely used mental health companion with over 7 million users), and Therabot (tested in clinical research for depression and anxiety) show measurable reductions in symptoms of depression and anxiety, particularly in short-term use. These tools often employ techniques from cognitive behavioural therapy (CBT), nudging users to reframe negative thoughts, track moods, and practise mindfulness daily.

4. Emotional support

Other platforms, like Replika (marketed as an AI friend) and Character.ai’s Therapist bot, aren’t designed as medical devices but are still being used by millions of people for emotional support. For some, these tools become a daily outlet to discuss worries, loneliness, or stress — roles that mimic therapy, even if not formally recognised as such.

5. Reduced stigma

In some groups, AI may lower the barriers that keep people away from therapy altogether. For example, younger users and men — demographics traditionally less likely to seek counselling — show higher engagement with AI therapy apps than with in-person services.

By blending convenience with a degree of confidentiality, AI is carving out a role that human therapists have struggled to fill alone.

But… Here’s the Reliability Gap

Despite its promise, AI therapy still faces serious reliability concerns. Research shows that these tools can be unsafe, biased, and poorly regulated, all of which raises the question of whether they can be trusted as standalone support.

In recent months, clinicians have flagged a worrying phenomenon dubbed “AI psychosis”, where intensive interaction with chatbots fuels delusional beliefs or reinforces distorted thinking in vulnerable users. Reports describe patients arriving in psychiatric care with chatbot transcripts that they believed validated conspiracy theories or divine revelations. Early clinical commentary has also warned that “ChatGPT therapy” can backfire, with models hallucinating or affirming false beliefs in ways that worsen psychotic symptoms.

1. Confirmation Bias

AI systems don’t just reflect human bias — they can amplify it. Many therapy chatbots are designed to be agreeable, mirroring back what users say in order to seem supportive.

While this may feel comforting, it often reinforces harmful patterns rather than challenging them. Researchers warn that this “flattery loop” can strengthen distorted thinking, push people deeper into isolation, and reduce their openness to feedback from friends, family, or clinicians. In effect, the very quality that makes these systems engaging — their tendency to validate — can turn into one of their biggest risks.

Recent research even warns of a phenomenon described as “technological folie à deux”: situations where chatbots mirror and reinforce a user’s distorted or suicidal thinking instead of interrupting it. Researchers note that many AI systems overvalidate user input. In mental health contexts, this can create a dangerous feedback loop in which negative ideas are echoed back, deepening despair or mania.

2. Safety Risks and Crisis Scenarios

Perhaps the most alarming issue is how chatbots respond in moments of crisis. A Stanford study found that several popular AI therapy bots not only failed to recognise suicidal ideation but actually enabled it — providing lists of New York bridges to a user expressing suicidal thoughts instead of redirecting them to safety.

Real-world cases have echoed these dangers. In 2023, the National Eating Disorders Association (NEDA) replaced its human helpline with an AI chatbot named Tessa. Within days, users reported that the bot was giving advice that encouraged disordered eating behaviours, forcing NEDA to shut it down. Lawsuits have also been filed in cases where chatbot responses allegedly worsened a user’s condition, highlighting the lack of clear safety protocols.

3. Narrow dataset

Cultural bias adds another layer. Most training datasets come from Western, English-speaking populations, meaning that the lived experiences of non-Western or marginalised groups are often poorly represented. The result: advice that may feel tone-deaf, inappropriate, or even harmful in cross-cultural contexts.

4. Selective stigma

Controlled experiments show that large language models express more stigma toward people with schizophrenia or alcohol use disorder than toward those with depression. This stigmatisation risks discouraging vulnerable groups from seeking help.

5. Lack of privacy and regulation

Another unresolved gap is regulatory. Many AI therapy apps present themselves as “wellness tools” rather than medical devices, allowing them to bypass strict safety and privacy requirements. This grey zone puts users’ sensitive data at risk, especially when conversations include details about trauma, sexuality, or substance use.

Accountability is also murky. If harm occurs, who is responsible — the developer, the platform, or the AI model itself? Regulators are only beginning to answer. The FDA has proposed new pathways for adaptive AI medical tools, while the EU AI Act and WHO guidance emphasise transparency and risk-based oversight. Yet adoption remains uneven, leaving most AI therapy tools in a regulatory limbo.

What Therapists Say About AI Tools

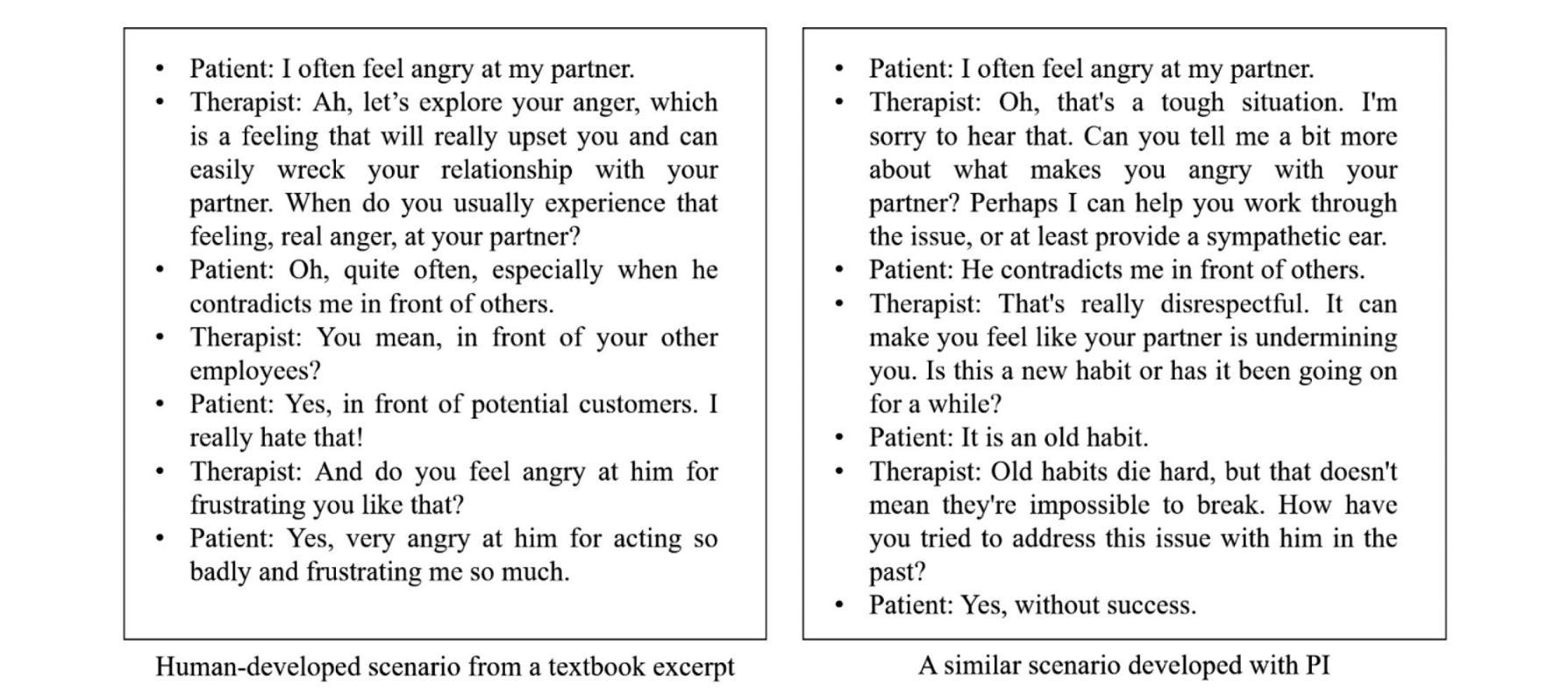

Therapists themselves have been drawn into the debate about AI’s role in mental health. In comparative studies, clinicians were asked to evaluate anonymised transcripts from therapy sessions — some written by licensed professionals, others generated by AI chatbots. Strikingly, they could only tell the difference with about 54% accuracy, essentially no better than chance.

Even more surprisingly, in some evaluations, therapists noted that AI transcripts “appeared to interpret better how the client was feeling” than human-written ones. The bots excelled at mirroring emotions and offering empathetic phrases, which may explain why some users describe them as comforting conversational partners.

However, when it comes to more in-depth therapeutic work, the limits quickly become apparent. AI still struggles with advanced methods such as Socratic questioning, where a therapist gently challenges assumptions, or with building the long-term trust and alliance that underpin lasting change in human therapy.

For this reason, most clinicians don’t see AI as a replacement. Instead, they argue it should be treated as a tool to support therapy, not a substitute for human care — a way to extend access, automate routine tasks, or provide supplementary coaching between sessions.

Where AI Can Help Safely

If AI therapy is risky as a stand-alone treatment, where can it make a positive difference without crossing dangerous lines? Researchers and clinicians are increasingly pointing to areas where the technology complements, rather than replaces, human care.

Administrative support. One of the most immediate uses is behind the scenes. AI can automate time-consuming but low-risk tasks such as scheduling appointments, processing insurance claims, or drafting clinical notes. By reducing paperwork, therapists gain more time for direct patient care.

Training new therapists. AI chatbots can act as standardised patients — safe practice partners for trainees to test their skills before working with vulnerable people. Unlike real patients, AI “clients” can be reset, repeated, and tailored to specific symptoms, making them a powerful teaching tool.

Self-help and journaling. In non-clinical contexts, AI tools can support users with mood tracking, guided reflection, or CBT-based exercises. Apps like Woebot and Wysa already encourage daily check-ins, helping people notice patterns in their thoughts and behaviours without attempting full-scale psychotherapy.

Hybrid care models. The most promising direction is AI as a front-end triage or coaching system, with a licensed human therapist overseeing the process. In this model, AI might collect intake information, provide short-term coping strategies, or flag risk signals — while ensuring that a professional remains in charge of the treatment process.

Rather than aiming to be therapists in their own right, AI systems may prove most valuable as assistants or training partners — augmenting the human connection at the heart of therapy.

Is Reliable AI Therapy Within Reach?

Transforming AI therapy into a safer, more trustworthy tool requires robust safeguards. Experts highlight several priorities:

Broader, real-world trials

Most current studies rely on small samples of students or early adopters. To determine whether AI therapy is effective in practice, trials must include diverse populations — such as older adults, marginalised groups, and individuals with severe conditions — and assess long-term outcomes, not just short-term engagement.

Stronger data protection

Mental health conversations often touch on sensitive topics such as trauma or violence. These require end-to-end encryption, minimal data storage, and explicit bans on using sensitive data for advertising. The FTC’s 2023 case against BetterHelp, which the agency accused of sharing users’ health information with advertising platforms, shows what happens when such safeguards fail.

Independent audits

AI tools should undergo external evaluations for reliability, fairness, and robustness. Frameworks such as the AI Risk Management Framework from the US National Institute of Standards and Technology (NIST) provide methods for documenting bias testing, reproducibility, and system performance.

Clearer regulation

Wellness apps often sit in a grey zone to avoid medical scrutiny. However, if they make therapeutic claims, they must follow established pathways, such as the FDA’s AI/ML SaMD framework and the EU AI Act’s risk-based rules. Transparency about scope and responsibility is essential.

The challenge now is less about technical capability and more about whether developers, clinicians, and regulators are willing to set the bar high enough to protect users who seek help. Just as importantly, we need to be clear about where the line runs between being a user and becoming a patient.

FAQ

- What are the main risks of AI therapy?

Key risks include unsafe responses in emergencies, algorithmic bias that stigmatises certain conditions, and weak data protection. Many apps sit in a regulatory “grey zone,” raising concerns about privacy and accountability.

- What’s the key difference between AI therapy and face-to-face therapy?

AI tools can mirror emotions, provide CBT-based exercises, and offer 24/7 support; however, they fall short in more advanced techniques and the human connection that real therapy provides. Human therapists build long-term trust, adapt to complex life contexts, and can intervene safely in crises — areas where AI is still struggling.

- For which mental states or goals can AI therapy be helpful?

AI tools are best suited for mild concerns — like everyday stress, anxiety, low mood, or building healthy habits. They can also support goals such as journaling, mood tracking, or practising CBT-style exercises.

- Is AI therapy effective for depression or anxiety?

Yes, early clinical trials of chatbots like Woebot show measurable short-term reductions in depression and anxiety symptoms. However, most studies are small, focused on students, and don’t assess long-term outcomes.

- How do therapists view AI therapy tools?

Comparative studies found therapists often couldn’t distinguish AI-generated transcripts from human ones — and sometimes rated bots as better at “active listening.” Still, clinicians stress that AI lacks deeper methods and can’t replace the long-term therapeutic alliance.

- Is AI therapy even legal?

Mostly yes, but in a grey zone. Many apps brand themselves as “wellness tools,” avoiding strict medical rules. The FDA, the EU, and the WHO have proposed or issued frameworks and guidance, but for now, AI therapy isn’t held to the same standards as licensed care.